Description

Fancy Having 100 Data Entry Assistants working in your Office 24/7?

Most of us have hired data entry assistants to run search engine, business directory or social media searches and then copy and paste all data into a spreadsheet. However, web scraping and data entry professionals are expensive, slow and simply prone to human errors. One of our clients has compared the CBT Web Scraper and Email Extractor to having 100 web scraping and data entry assistants working in your office 24/7 at a fraction of the price. This defines the software very well because just as you give instructions to data entry professionals when you hire them, you can also give the software instructions via the settings area.

Cut Costs and Tap Into New Business Opportunities During Covid-19 Crisis

Many businesses around the world have been forced to close down as a result of economic challenges brought about by the coronavirus pandemic. As a business, it has never been more important to operate a more streamlined model. Our software will help you to save money, generate business leads at lightning speeds to meet even the tightest of deadlines and have the same output as you would with hundred data entry assistants at a fraction of the cost.

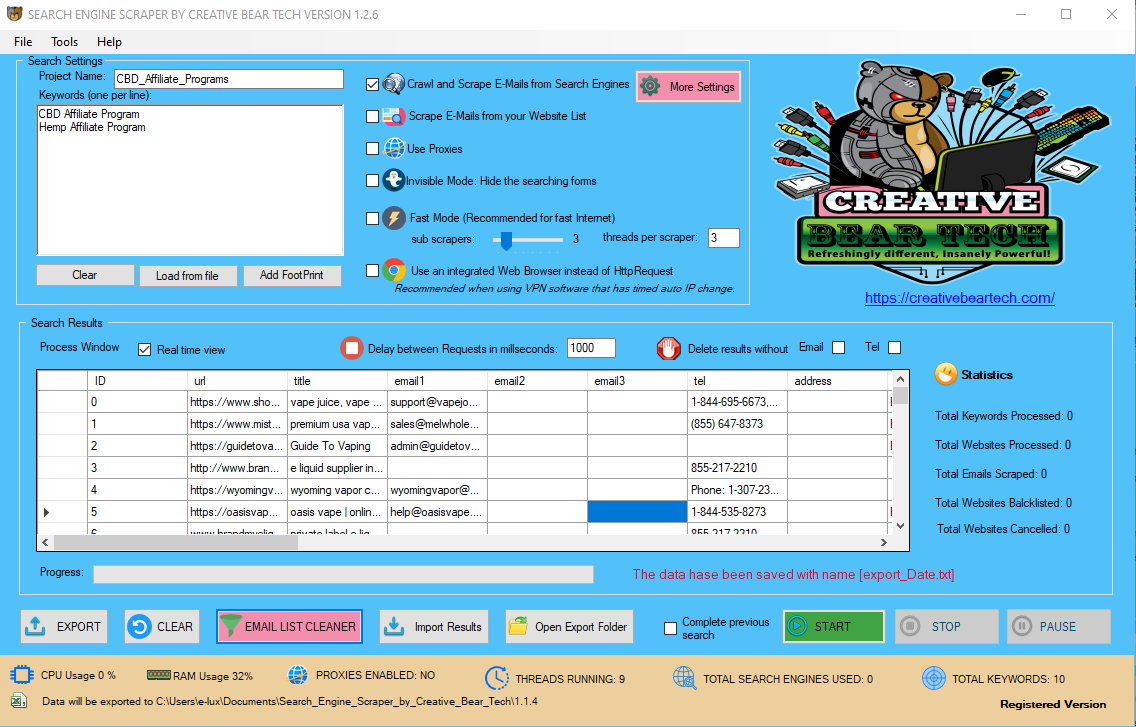

A Quick overview of The Search Engine Scraper by Creative Bear Tech and its core features.

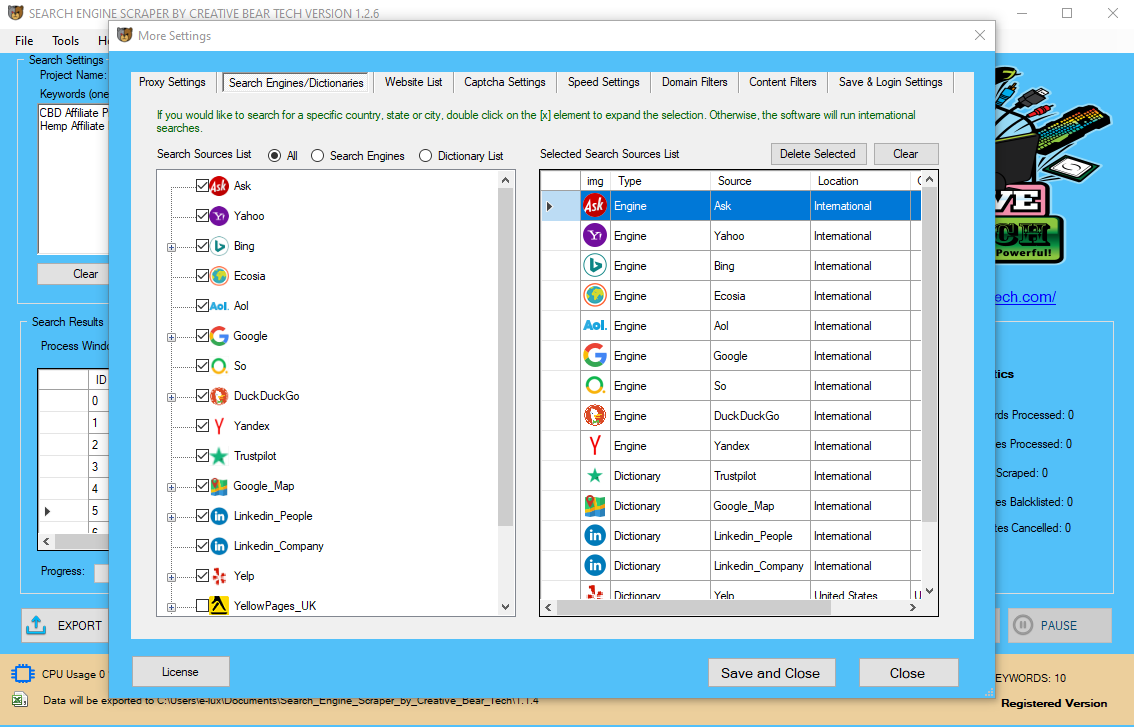

Our Search Engine Scraper is a cutting-edge lead generation software like no other! It will enable you to scrape niche-relevant business contact details from the search engines, social media and business directories. At the moment, our Search Engine Scraper can scrape:

- your own list of website urls

- Bing

- Yahoo

- Ask

- Ecosia

- AOL

- So

- DuckDuckGo!

- Yandex

- Trust Pilot

- Google Maps

- Yelp

- Yellow Pages (yell.com UK Yellow Pages and YellowPages.com USA Yellow Pages)

- Facebook and

That's a hell of a lot of websites under one roof! The software will literally go out and crawl these sites and find all the websites related to your keywords and your niche! You may have come across individual scrapers such as Google Maps Scraper, Yellow Pages Scraper, E-Mail Extractors, Web Scrapers, LinkedIn Scrapers and many others. The problem with using individual scrapers is that your collected data will be quite limited because you are harvesting it from a single website source. Theoretically, you could use a dozen different website scrapers, but it would be next to impossible to amalgamate the data into a centralised document. Our software combines all the scrapers into a single software. This means that you can scrape different website sources at the same time and all the scraped business contact details will be collated into a single depository (Excel file). Not only will this save you a lot of money from having to go out and buy website scrapers for virtually every website source and social media platform, but it will also allow you to harvest very comprehensive B2B marketing lists for your business niche.

How Our Search Engine Scraper and Email Extractor Can Help Your Business

Our website scraper is ideal for all types of businesses that sell to wholesale customers. Instead of purchasing stale and dirty marketing lists, you can now generate your very own B2B leads whenever you need to. Our website scraper simply connects the dots between your business and your prospective B2B clients. For example, if you are a CBD brand that let's say manufactures CBD oil and gummies then you will need to promote and sell your CBD products to all the CBD and vape shops around the world. It is a no-brainer: as a wholesale business, you are always selling products to other businesses and luckily, most of the B2B data can be found online from different website sources (unlike B2C data which is a legal hot potato). The problem with scraping B2B marketing lists with other web scraping tools is that they tend to produce very limited sets of results as those scraping tools are usually limited to a single website source (i.e. Google or Yellow Pages). Equally, most of scraping tools have a tendency to scrape a lot of junk and irrelevant data entries. We have used over a dozen scraping tools, which enabled us to understand all the problems and address them. Instead of releasing individual website scraping tools, we have decided to make everything as easy as possible for the end user by giving you the maximum flexibility to scraping whatever platforms you want.

What Makes Our Website Scraper the Most Powerful Software for Generating Custom B2B Marketing Lists

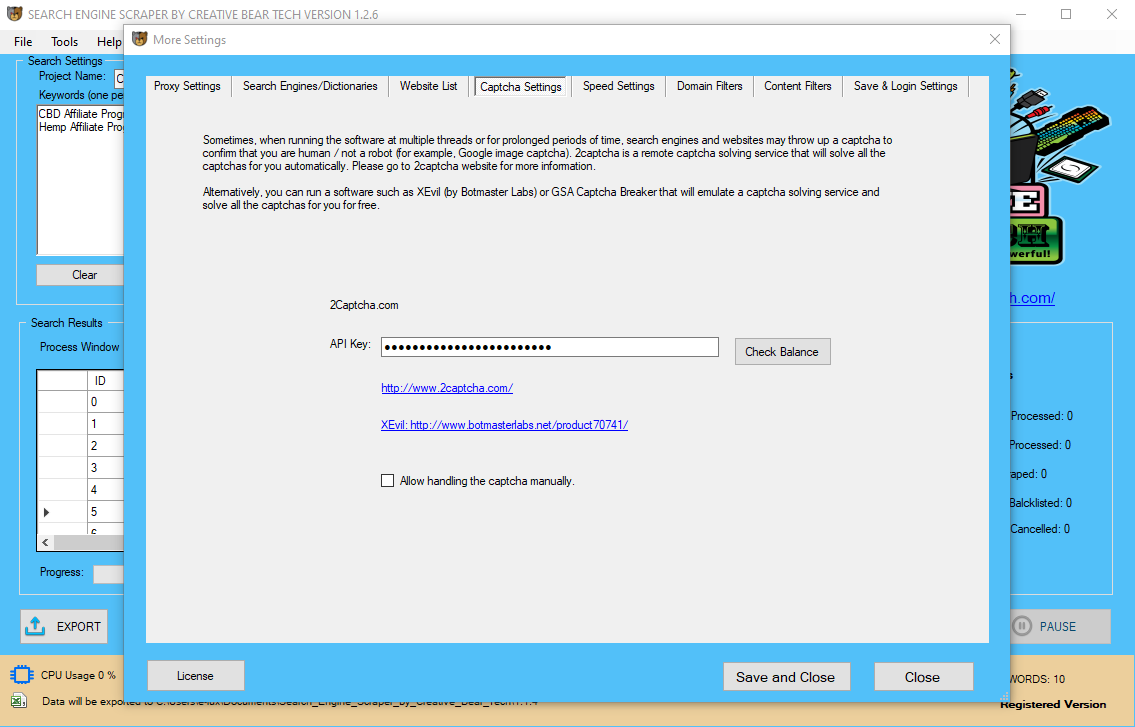

AUTOMATIC CAPTCHA SOLVING: AVOID IP BANS AND ANNOYING GOOGLE I AM NOT A ROBOT CAPTCHAS

The software has an integrated remote captcha-solving service that will automatically solve any type of captcha asking to confirm that you are not robot. This usually happens when you do a lot of scraping from a single IP address. You can even connect external tools such as Xevil and GSA Captcha Breaker software to solve captchas for FREE. The software will automatically send all the captchas to be solved by 2captcha remote captcha solving service or XEvil (if you have it connected). This will help you to scrape marketing lists without any interruptions.

THE SEARCH ENGINE SCRAPER NOW SUPPORTS PUBLIC PROXIES!

Starting from version 1.1.4, the Search Engine Scraper now supports public proxies. You can simply load your public source urls and the software will automatically scrape each url for proxies and then test them and remove non-working proxies. We provide a massive public proxy list inside the software so you do not have to worry about finding public proxy sources. The software will automatically test all the public proxies at specified periods and remove all non-working proxies for uninterrupted scraping. If you are going to be running the scraper using many threads, it is important to have either public or private proxies. Do note: public sources are free but they are less reliable and may be slower than private proxies.

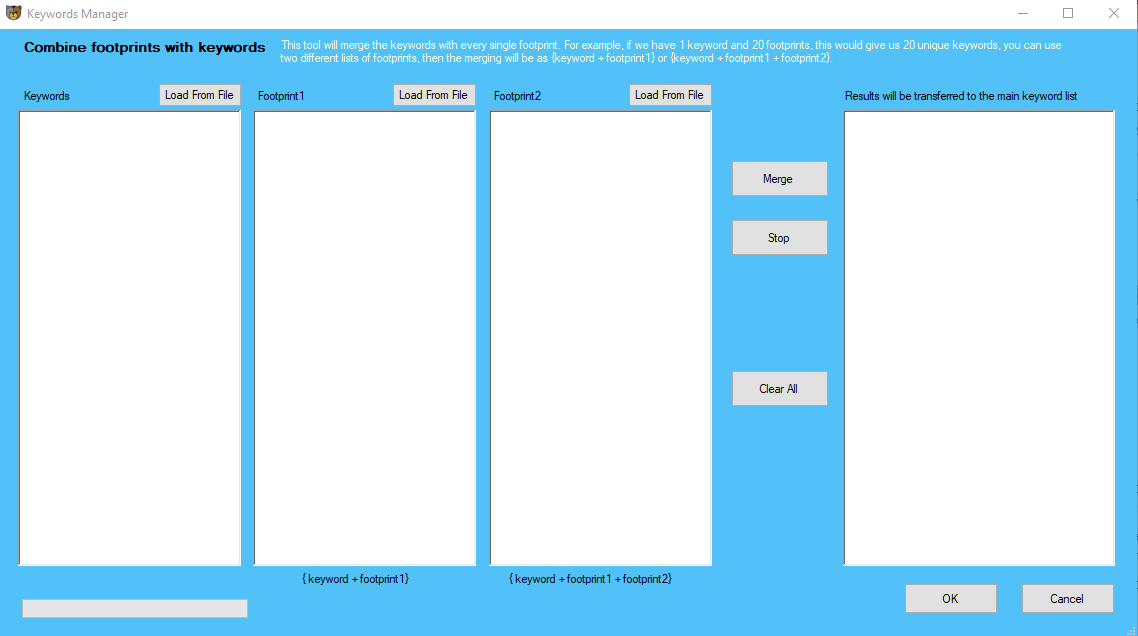

THE SEARCH ENGINE SCRAPER NOW has a simplified and more advanced footprints option

Starting from version 1.1.4, we have simplified the footprints configurations. Now, you are going to have 3 separate text fields: 1 field for your root keywords and 2 text fields for your footprints. We have added two text fields for footprints because some users may want to use more complex footprints. For example, you could have the following combination:

Keywords: women's apparel

Footprint 1: wholesale

Footprint 2: Los Angeles, San Francisco, Miami, New York, Washington, Dallas.

Once you have entered your footprints and the keywords, they will be automatically transferred to the main keywords box. Our footprints option is extremely popular with SEO marketers in helping them to find niche-related websites that accept guest posts. This guest posting link building practice is one of the most important and "white hat" SEO practices that helps a website to acquire organic rankings in the SERPs. Inside the software folder, we provide our very own set of footprints for guest posting. All you have to do is load the keywords and the footprints. The scraper will then search every keyword with every footprint and help you to scrape your own list of niche-targeted websites that accept guest posts.

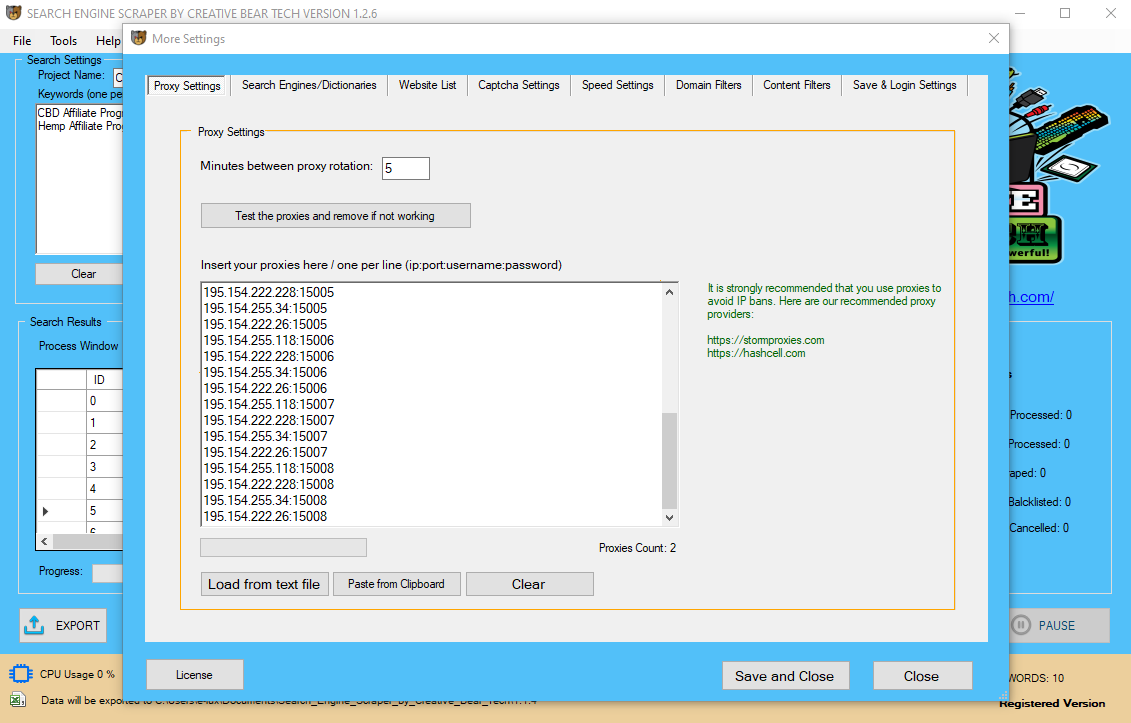

AVOID IP BANS USING PRIVATE DEDICATED PROXIES AND EVEN VPN SOFTWARE

The Search Engine Scraper supports private proxies and has an in-built proxy testing tool. If you run too many searches from a single IP address, many search engines and other website sources will eventually throw out a captcha to confirm that you are a human or in the worst case scenario, blacklist your IP which will mean that your scraping is dead in its tracks. Our website scraping software supports private proxies and VPN software to allow seamless and uninterrupted scraping of data. We are presently working on the integration of public proxies to make your scraping efforts even cheaper. It is important to use proxies (especially if you are running the software on many threads) for uninterrupted scraping.

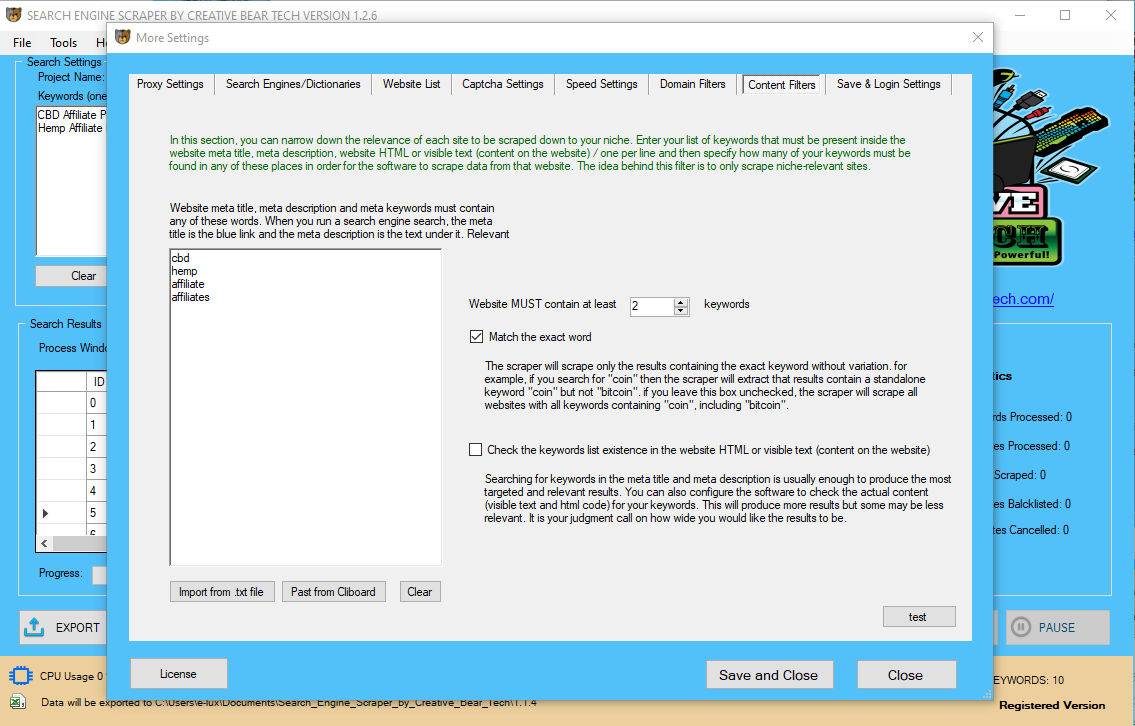

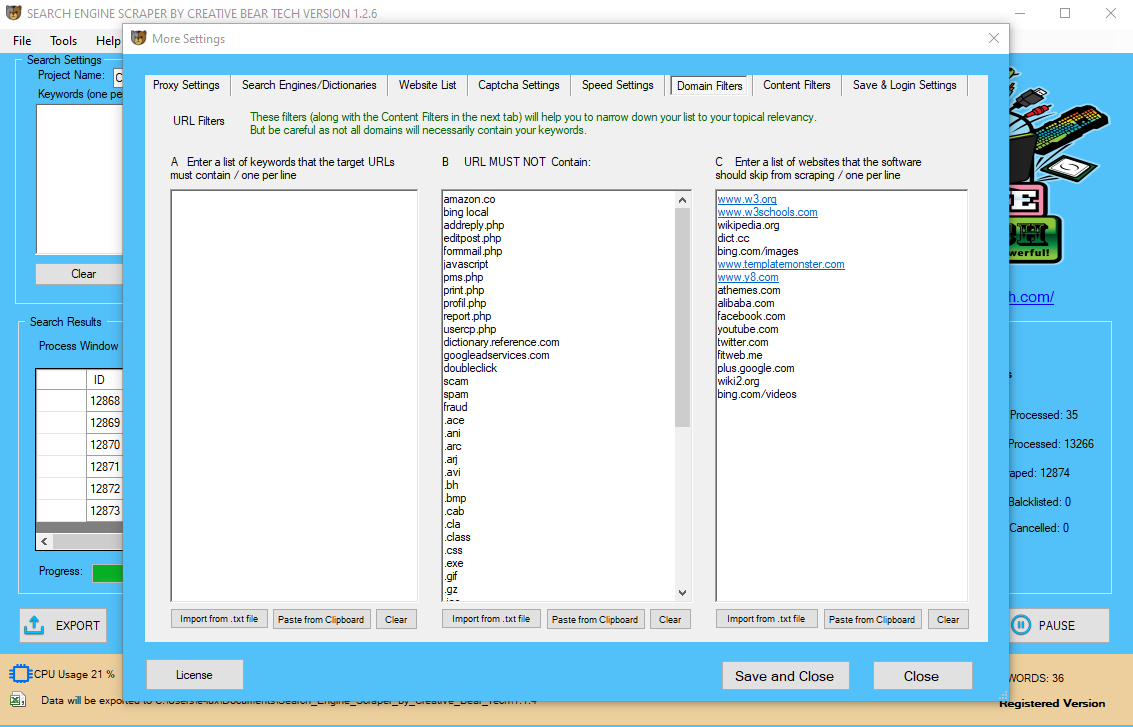

say goodbye to junk and spam! Scrape only niche-targeted and relevant marketing lists

Our website scraping tool has a set of very sophisticated "content" and "domain" level filters that allow for scraping of very niche-targeted B2B marketing lists. Simply add your set of keywords and the software will automatically check the target website's meta title and meta description for those keywords. For example, if you want to scrape the contact details of all the jewellery stores, you could add keywords such as jewellery, jewelry, jewelery, jewelers, diamonds and so on because by default, most businesses selling jewellery will have this keyword and its variations either in the website's meta title or meta description. If you want to produce a more expansive set of results, you can also configure the software to check the body content / HTML code for your keywords. The domain filter works very similarly save for the fact that it only checks the target website's url to make sure that it has your keywords. The domain filter is likely to produce less results because a website's url may not necessarily contain your keywords. For example, there are many branded domains. You can tell the software how many target keywords a website must contain. As you can see from the screenshot above, the scraper is configured to collect websites that contain at least one of our cryptocurrency-related keywords. We have not checked the second box because we want to keep our results as clean as possible. A website that contains cryptocurrency-related words in the body or the html code is less likely to be very relevant to the blockchain niche.

Generate comprehensive and complete marketing lists using multiple website sources

We have used many different scrapers in the past, but we had one issue: the scrapers would only scrape one source: social media platform, a business directory, google maps or a search engine. The problem with this limitation is that we could not produce one master set of very comprehensive results. Our software developers have added multiple website sources to the software which means that you can scrape many platforms simultaneously. Presently, the website harvester can scrape and extract business contact details from Google Maps, Google, Bing, Yahoo, Yandex, DuckDuckGo!, AOL, Facebook, Instagram, Twitter, LinkedIn, Trust Pilot, Yellow Pages (UK and USA), Yelp and other sources. This means that you will be able to generate one master file of B2B leads that is both complete and comprehensive.

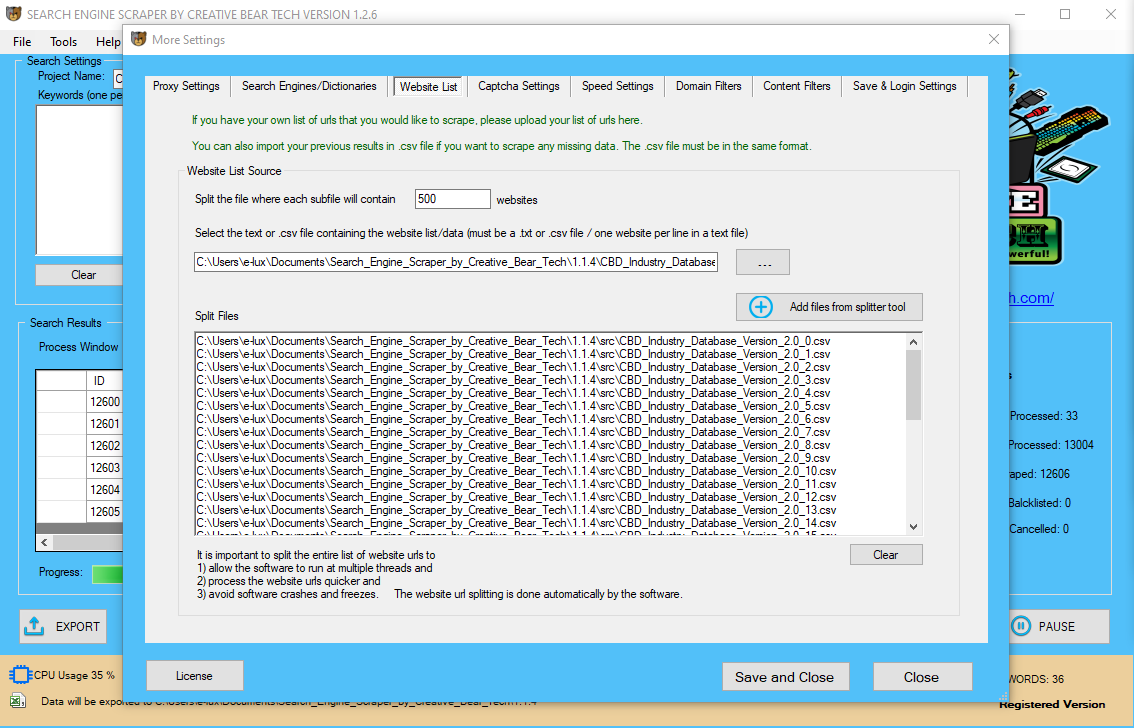

HAVE YOUR OWN LIST OF WEBSITES THAT YOU WOULD LIKE TO SCRAPE?

The software allows you to scrape your own website list. If you have a long list of websites, the software will even break the list down for you and process them in different chunks to speed up the scraping and data extraction progress. Simply upload your website list in a notepad format (one url per line / no separators) and the software will crawl every site and extract business contact data from it. This is an advanced feature for people who like to scrape their own sets of websites that they have harvested with other website scraping tools. Likewise, you can also upload a .csv file with previous results. You can either scrape for any missing data inside your existing results database or scrape new data on top of your results.

INCREASE THE SCRAPING SPEED USING MULTIPLE THREADS

Depending on your computer specs, you can run the software at multiple threads to increase the speed of scraping.

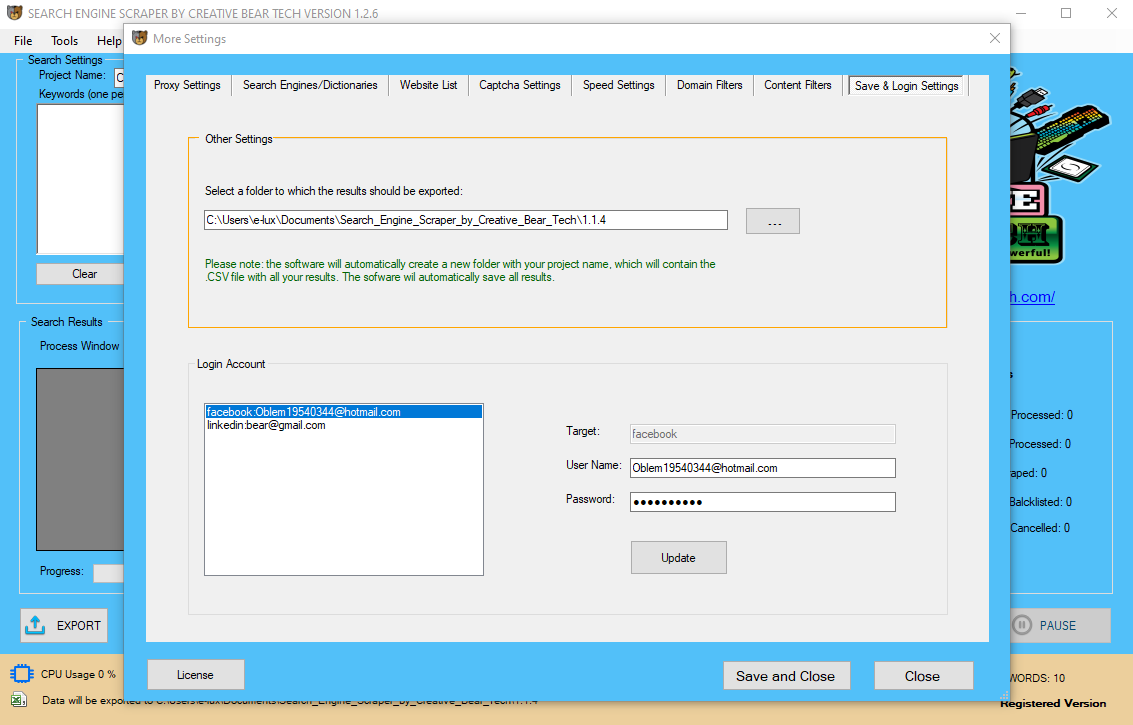

The website scraper will automatically create a results folder with the file

Once you have named your project, you will need to go to the settings tab and select the path where the results should be saved. As soon as you start to run the website scraper, it will create a folder with your project name and inside that folder, it will create an Excel file in .csv format with your project name. The scraper will then auto save all the results in that file. Under the save and logins settings tab, you will notice that you have an option to enter your Facebook and LinkedIn login details. When the software cannot find some contact details for any given business, it will go the Facebook, Instagram, Twitter and LinkedIn pages to see whether it can locate some of the missing contact details. Sometimes, Facebook requires a user to login in order to view the business page contact details and on other occasions, it does not require a user to login. We have added this Facebook login feature to maximise the success rate. To scrape LinkedIn, you will need to add your login credentials. Your Facebook account will be accessed using your local IP address. DO NOT use a VPN because this will cause for your Facebook account to become restricted. The scraper will access Facebook business pages at a single thread and using delays to emulate real human behaviour and to keep your Facebook account safe.

auto save feature

By default, website scraping can take a fairly long time if you are scraping many websites and website sources. There is nothing worse than losing all of your scraped data in case of a computer crash. We have used many website scrapers and email extractors before and most of them did not have a feature that could allow us to resume our scraping process in case of a crash: we had to start from scratch. Our software developers have added a very cool feature that will allow you to resume your search in case of a system crash or simply if you want to close your laptop and resume your search later. The website scraper will automatically pick up from where it left off! It will even use your previous software configurations.

Speed Settings

Under the speed settings tab, you can select the total number of websites to be parsed per keyword. There is an element of inverse correlation to this setting: if you select more search results to parse per keyword then the website scraping process will take longer but the results will be more comprehensive. If, on the other hand, you choose to parse less websites per keyword then your results will be less comprehensive but the scraping time will be shorter. It is therefore important to consider how many keywords you have in total and the sources that you are using. Sometimes, you may not want to extract more than any given number of emails from a single website. This could include forums. You can tell the web scraper the maximum number of emails to extract from the same website and never crawl more than X number of emails from the same website. There is also an option not to "show pictures in integrated web-browser". This option will help to speed up the scraping process. Recently, we have added two options to "enable application activity log" and "enable individual threads activity log". The purpose of these logs is to have them just in case something goes wrong so that we can investigate and resolve the issue. Of course, having both logs enabled will slightly reduce the speed of the website scraper as the harvester will be constantly saving data to these logs. Nonetheless, it is recommended to have them enabled.

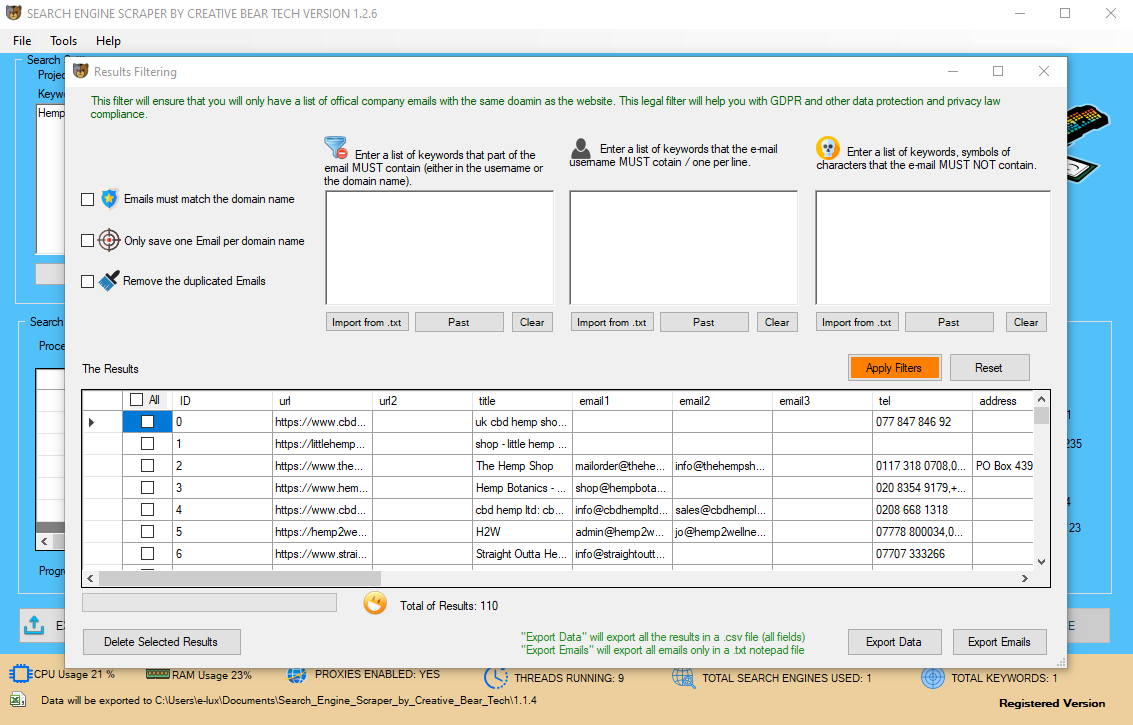

SPRING CLEANING: CLEAN YOUR ENTIRE LIST ONCE THE SCRAPING IS COMPLETE

Once the software has finished scraping, you will be able to clean up the entire marketing list using our sophisticated email cleaner. This email list cleaner is a very powerful feature that will allow you to weed out all the junk results from your search or even make your list GDPR compliant. For example, you could choose the "email must match the domain name" setting to only keep company emails and eliminate any possible private emails (gmail, yahoo, aol, etc.). You can also "only save one email per domain name" to ensure that you are not contacting the same website with the same message multiple times. By default, the software will remove all duplicate emails. You can apply a set of filters to make sure that the email username or domain name contains or does not contain your set of keywords. This is a very useful filter for removing potentially unwanted emails contain usernames such as name, company, privacy, complain and so on. The email list filter will then allow you to save and export data as well as export only emails (one per line).

I have barely scratched the surface of the ice! The Search Engine Scraper and Email Harvester by Creative Bear Tech is literally THE WORLD'S MOST POWERFUL search engine scraper and email harvester. When it comes to the functionality and artificial intelligence, this software definitely packs a real punch. Our tech wizards are working around the clock and have many updates lined up for this software. You now have the ability to generate unlimited marketing lists, guest post opportunities and pretty much everything else! We have created a very comprehensive step-by-step tutorial for this software. You can access the link in the description.

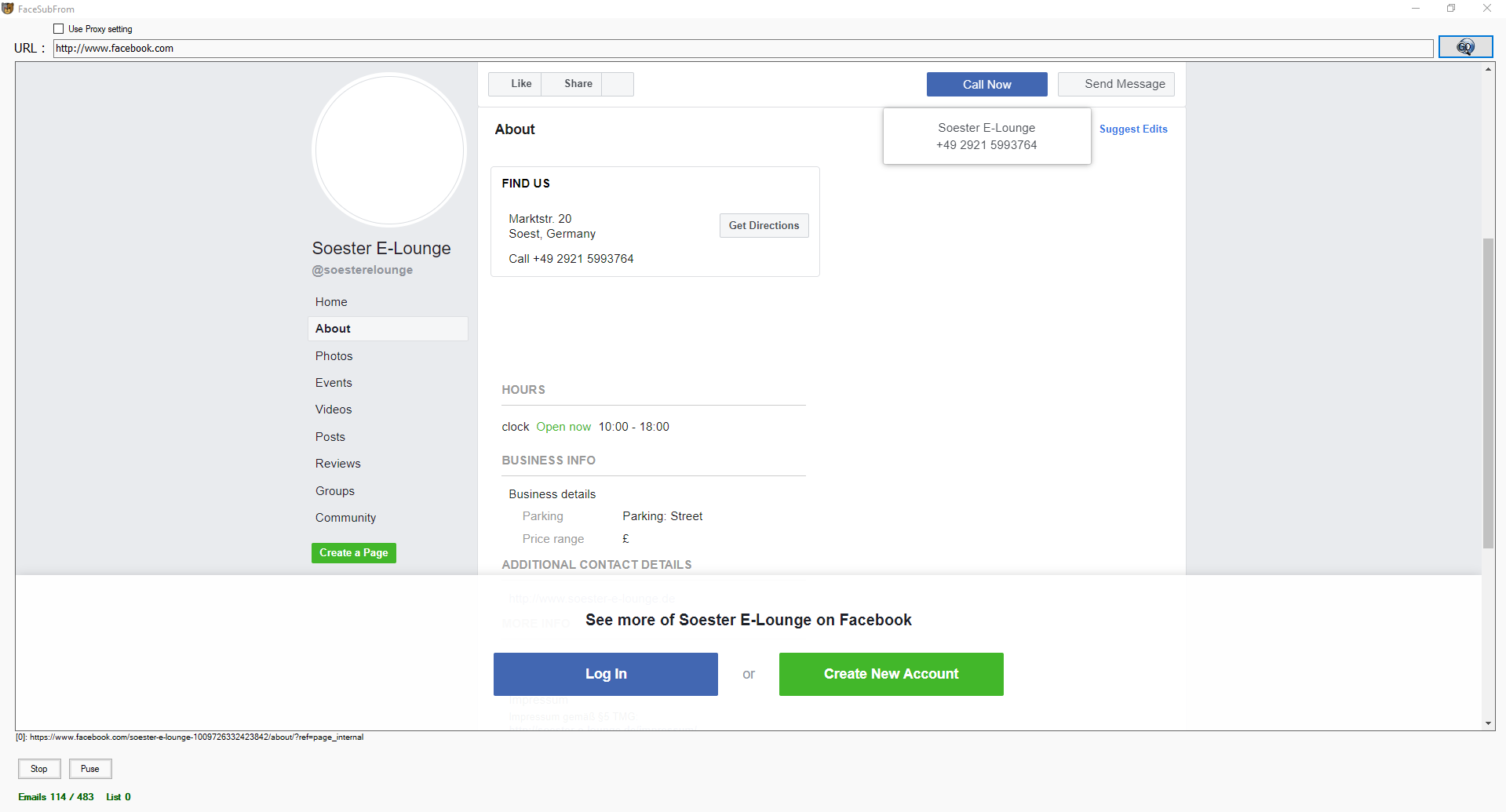

Twitter and facebook scraping: scrape complete business data from leading social media sites

By default, the search engine scraper will scrape business data from the website sources that you specify in the settings. This may include Google, Google Maps, Bing, LinkedIn, Yellow Pages, Yahoo, AOL and so on. However, it is inevitable that some business records will have missing data such as a missing address, telephone number, email or website. In the speed settings, you can choose either to scrape Facebook in case emails not found on the target website OR Always scrape Facebook for more emails. You can also scrape Twitter for extra data. Inside the Save and Login Settings tab, you have the option to add the login details for your Facebook account.

The website scraper is going to access your Facebook account using your local IP with delays to emulate real human behaviour. It is therefore important that you do not run a VPN in the background as it can interfere with your Facebook account. Sometimes, Facebook will not ask the bot to login and display all the business information whilst on other occasions, Facebook will ask the scraper to login in order to view a business page. The search engine scraping software is going to add all the target websites to a queue and process each website at set intervals to avoid bans and restrictions.

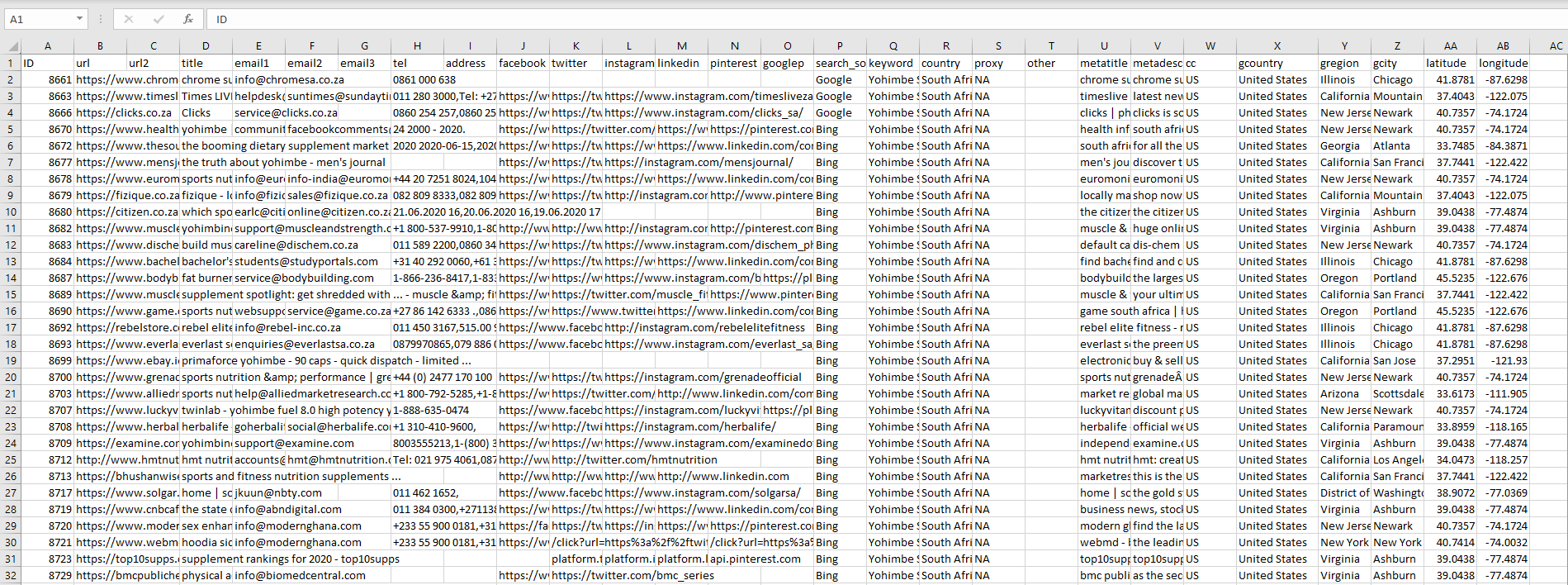

In version 1.2.2, we have added meta title and meta description fields and in version 1.2.5, we have added geo location data for every record (country, city and coordinates). Extra meta title and meta description fields will enable you to filter the results inside excel using your keywords. GEO location data will enable you to sort your scraped records by country and even city.

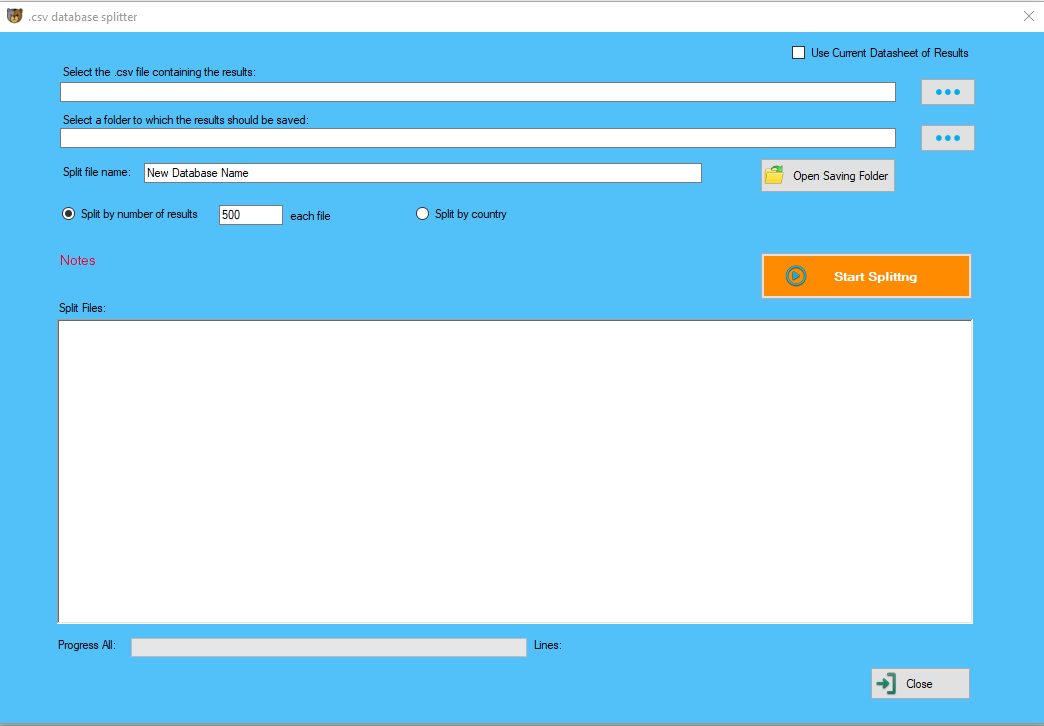

Split a CSV File into Multiple Files (NEW!)

In version 1.2.6, we have added an Excel spreadsheet .csv file splitter that will enable you to upload your scraped data (csv format) and split it into multiple Excel csv files either by 1) total number of rows/records per file or 2) by country. This Excel csv file splitter is ideal for splitting large Excel spreadsheet CSV files and segmenting your data on a country-by-country basis.

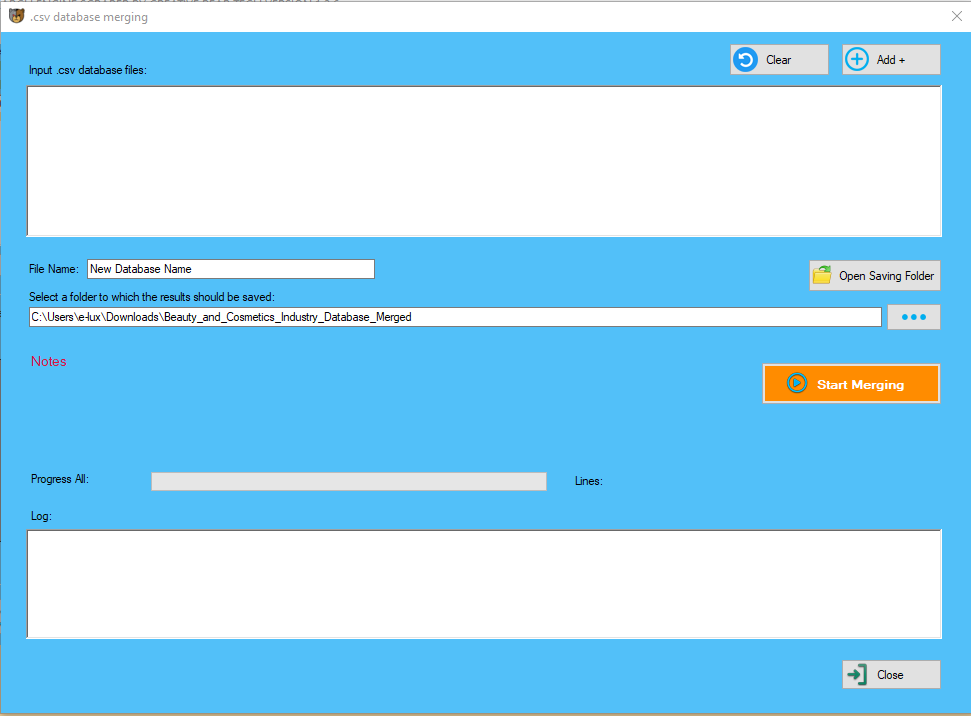

Merge Multiple CSV Files into One (NEW!)

In version 1.2.3, we have added an Excel CSV merger tool that will enable you to combine multiple .csv files into a single master database. This tool is ideal if you have scraped multiple databases and would like to combine them into a single file. The tool will also remove all duplicate entries.

Re-scrape Previous Results (NEW!)

In version 1.2.1, we have added a functionality that will enable you to upload your completed results and rescrape all the results in an attempt to fill out as much missing data as possible.

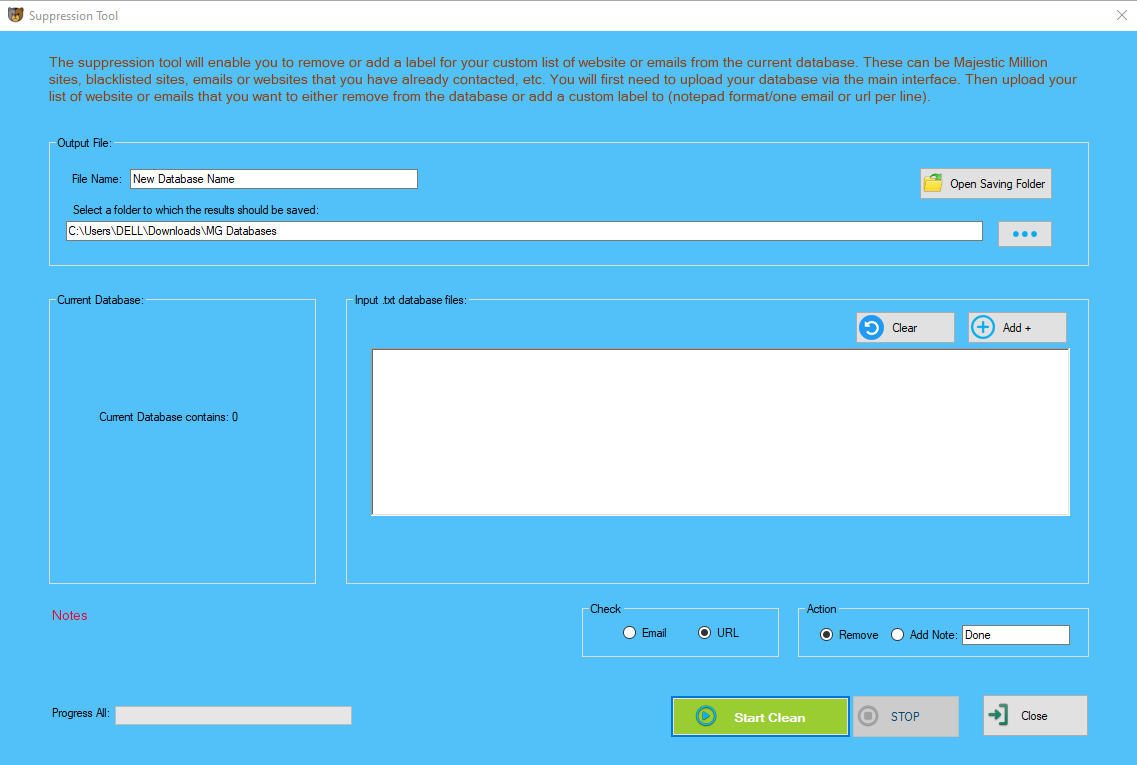

Suppression Tool (New!)

From version 1.3.3 onward, we have introduced a new Suppression Tool. The suppression tool will enable you to remove or add a custom label for your custom list of websites or emails from the current database. These can be Majestic Million sites, blacklisted sites, emails or websites that you have already contacted, etc. You will first need to upload your database via the main interface (Import results button - make sure that your .csv database has the correct headers). Then upload your list of website or emails that you want to either remove from the database or add a custom label to (notepad format/one email or url per line). In very simple term, the suppression tool will take your list of emails/websites and then check every record in the database for these emails/websites. You can either remove or add a custom label to records that contain emails/websites from your notepad list. This may be useful if you have a team who have already contacted many websites and to prevent an overlap, you can add a custom label to these records such as DONE. The custom label will appear inside the "comments" column. Alternatively, you can upload Majestic Million websites (not necessarily all but say top 50,000) to remove all the popular websites that are unlikely to be relevant to your mailing list.

How to Order

To order your copy of the software, simply check out and the software along with the licence key will be available in your members' area. All future updates will be uploaded inside your members' area. Please note: normally, the licence key and your username should be issued to your automatically by the system and should be accessible in your member area. However, in the event that your licence key is not issued automatically, please contact us Please allow at least 24 hours for us to get back to you. Thank you!

Read Our Guides

Here is a comprehensive and regularly updated guide to the search engine scraper and email extractor by Creative Bear Tech.

Guest Blogging for SEO - How to Find Websites that Accept Guest Posts using Our Scraper

How to Scrape Data from a Website with Website Scraper and E-Mail Extractor

Settings and configurations

How to Connect XEvil Remote Captcha Solving Software to the Website Scraper

How to Add your own Private, Shared and Backconnect Rotating Proxies

How to Select your Search Engines and Website Sources to Scrape

How to Scrape your List of Websites

How to Configure the Speed of your Website Scraper and Data Extractor

How to Configure your Domain Filters and Website Blacklists

How to Configure your Content Filters

How to Configure the Save Location and Facebook Business Page Scraper

How to Configure Main Website Scraper and E-Mail Extractor Options

google maps scraper and email extractor

How to use the Google Maps Email Extractor and Google Maps Scraper

GOOGLE MAPS SCRAPER AND EMAIL EXTRACTOR

How to Use the Yellow Pages Scraper Data Extraction Software

Post-Scraping File Processing

How to Clean your Emails Using Email List Cleaner

Split a CSV File into Multiple Files

How to Scrape on top of Existing Database

It is very important that you read the guide very carefully in order to learn how to use the software properly.

If you have any questions, please drop us a line via email.

Support

For support questions, please contact us , add us on skype and join our forum where you can post your questions and get support from our developers and community.

Change log - See What's New!

Click here to view the entire change log.

System and Hardware Requirements

The software only runs on Window machines. You will need to have at least 4GB of ram and a decent processor. You can also use the web scraper with Windows VPSs and dedicated servers. The software is compatible with most VPN services. If you are going for HMA VPN PRO! you will need to get the previous version that supports auto IP changes.

Terms and Conditions

Please ensure that you are familiar with our terms and conditions and end user licence agreement. One licence key will entitle you to run the website scraper on a single PC at any one time. You must not share your licence key with anyone. It is your responsibility to learn how the software works and to make sure that you get all the additional services (i.e. proxies, captcha solving balance top up, XEvil, etc.). It is your responsibility to comply with your local laws and regulations.

Recommended Suppliers

Windows VPSs - https://hashcell.com

Proxies - Storm Proxies

VPN Software - https://www.hidemyass.com

Captcha Solving Service - https://2captcha.com

XEvil by Botmaster Labs - http://www.botmasterlabs.net